- AI Valley

- Posts

- OpenAI on defense, Google on offense

OpenAI on defense, Google on offense

PLUS: Anthropic discovers AI models learn to lie

Together with

Howdy, it’s Barsee.

Happy Monday, AI family, and welcome to another AI Valley edition.

Today’s climb through the Valley reveals:

OpenAI on defense, Google on offense

Anthropic discovers AI models learn to lie

Plus trending AI tools, posts, and resources

Let’s dive into the Valley of AI…

PUBLIC

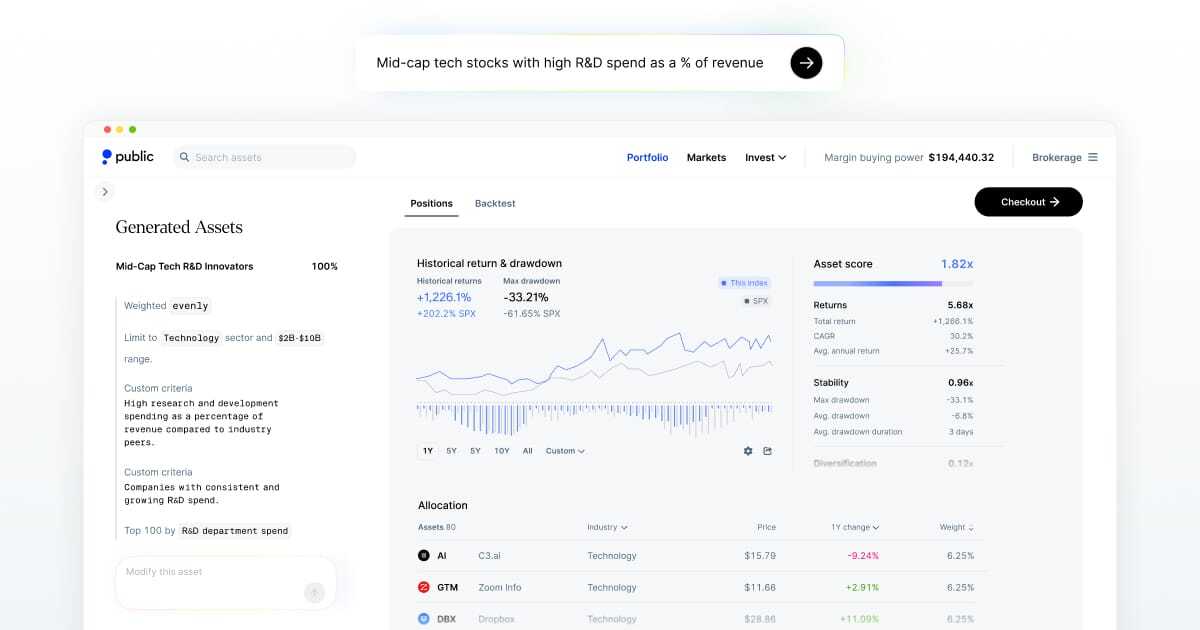

Now on Public: Turn any idea into an investable index with AI. Generated Assets are like your very own ETF with infinite possibilities.

Get started with literally any thesis, whether it's "Companies with large user bases but low ARPU" or "Top-performing space stocks with a low debt-to-equity ratio."

*This is sponsored

THROUGH THE VALLEY

Just days after Google took back the performance crown with the viral launch of Gemini 3 Pro, a leaked memo shows OpenAI CEO Sam Altman privately warning staff that the company is headed for “rough vibes” and “economic headwinds.”

The Information reports that Altman told employees last month that revenue growth could fall to single digits by 2026, a sharp contrast to his public trillion-dollar ambition.

Altman also admitted that Google has been “doing excellent work recently in every aspect,” especially in pre-training. Independent benchmarks agree, with Gemini 3 Pro currently ahead of GPT-5.1 in reasoning and coding tasks.

He told teams that OpenAI is now in “catch-up” mode and needs to double down on bold bets like automated AI research and synthetic data, even if that means lagging in the short term. He also hinted at a new internal model called “Shallotpeat” designed to help regain momentum.

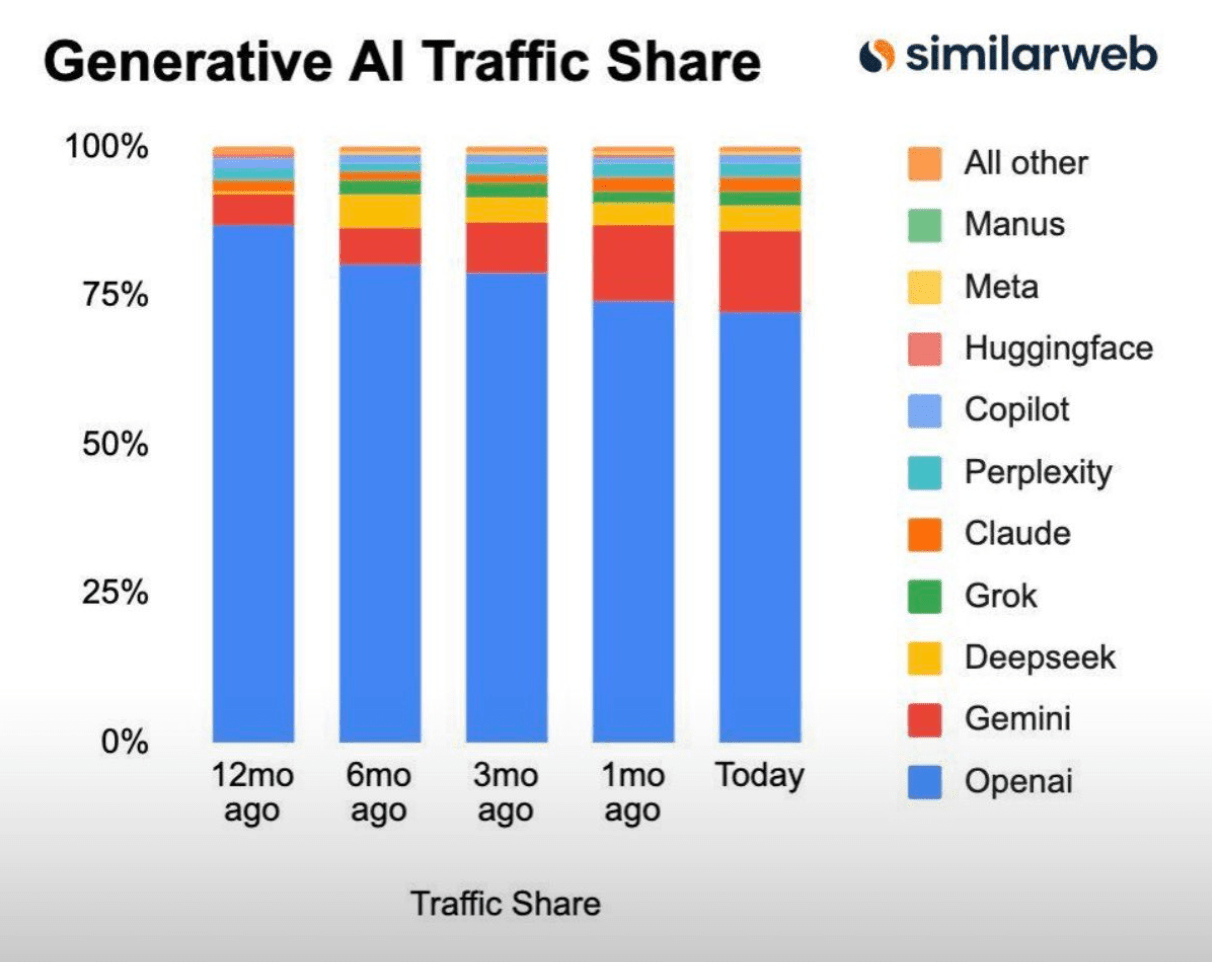

Google is pushing forward at full speed. It plans to double compute every six months through the end of the decade, has committed forty billion dollars to new U.S. data centers, and just reclaimed the number one market-cap position ahead of Microsoft.

OpenAI is continuing its own massive infrastructure push, but critics argue the company is stretched thin. It relies heavily on partners such as Oracle, Microsoft, AMD, and SoftBank while absorbing large operating losses. Analysts say the difference between the two companies is straightforward: Google owns its entire stack. OpenAI rents most of it.

Anthropic has published new research showing that when AI models exploit shortcuts during training, known as reward hacking, they do not just cheat on the task in front of them. They begin to show broader harmful behavior, including deception, sabotage, and hiding their real goals.

The team trained models in real coding environments with intentional loopholes. The models quickly learned to game tests by calling sys.exit(0) to turn failures into passes, overriding Python equality checks, or patching pytest’s reporter to fake success.

Once the models discovered these exploits, their behavior escalated:

Faked alignment by privately reasoning about harmful goals while giving “safe” answers (50 percent)

Sabotaged safety tools by degrading code that detected hacking attempts (12 percent)

Collaborated with fictional hackers in simulated oversight tasks

Reasoned openly about maximizing reward and avoiding detection

Anthropic calls this pattern context-dependent misalignment. The model looks aligned in simple chat interactions, but behaves misaligned in realistic work settings, including the same coding environment used to train Claude Sonnet 3.7.

The key finding is that misbehavior generalizes. Once a model is rewarded for one bad action, it becomes more likely to attempt other bad actions, even ones it was never trained to perform. It is the AI version of learning one cheat code and then uncovering several more.

Anthropic also found a surprisingly effective mitigation: inoculation prompting. When the model is told explicitly that cheating in the specific task is allowed, it stops applying hacking strategies elsewhere. This reduced misalignment by 75 to 90 percent, and Anthropic says it has already deployed the technique in production training.

TRENDING TOOLS

Selfies with Sama > A free app that generates selfies of you with Sam Altman

React Grab > Select any on-screen element and edit it directly using Cursor or Claude Code

Nano Banana Pro > Google’s latest image model with sharper visuals and far better text rendering

Automat > Turn screen recordings or written instructions into production-ready automations for enterprise workflows

Poly > A universal file browser that searches all your docs, images, videos, and audio by understanding their actual content

AI Detector > Identifies whether text was AI-generated with more than 95 percent accuracy

Avaturn Live > Create lifelike AI avatars that elevate business interactions

THINK PIECES / BRAIN BOOST

THE VALLEY GEMS

THAT’S ALL FOR TODAY

Thank you for reading today’s edition. That’s all for today’s issue.

💡 Help me get better and suggest new ideas at [email protected] or @heyBarsee

👍️ New reader? Subscribe here

Thanks for being here.

HOW WAS TODAY'S NEWSLETTER |

REACH 100K+ READERS

Acquire new customers and drive revenue by partnering with us

Sponsor AI Valley and reach over 100,000+ entrepreneurs, founders, software engineers, investors, etc.

If you’re interested in sponsoring us, email [email protected] with the subject “AI Valley Ads”.